InceptionNetV2

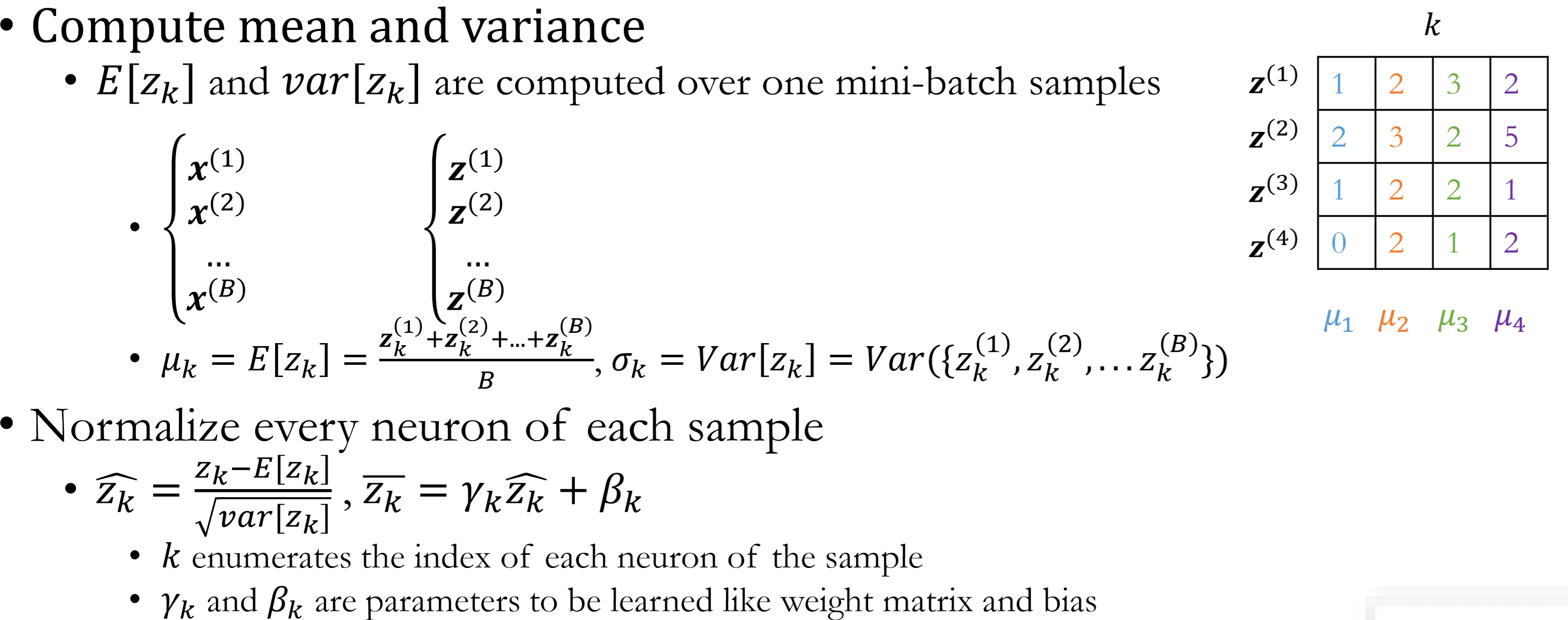

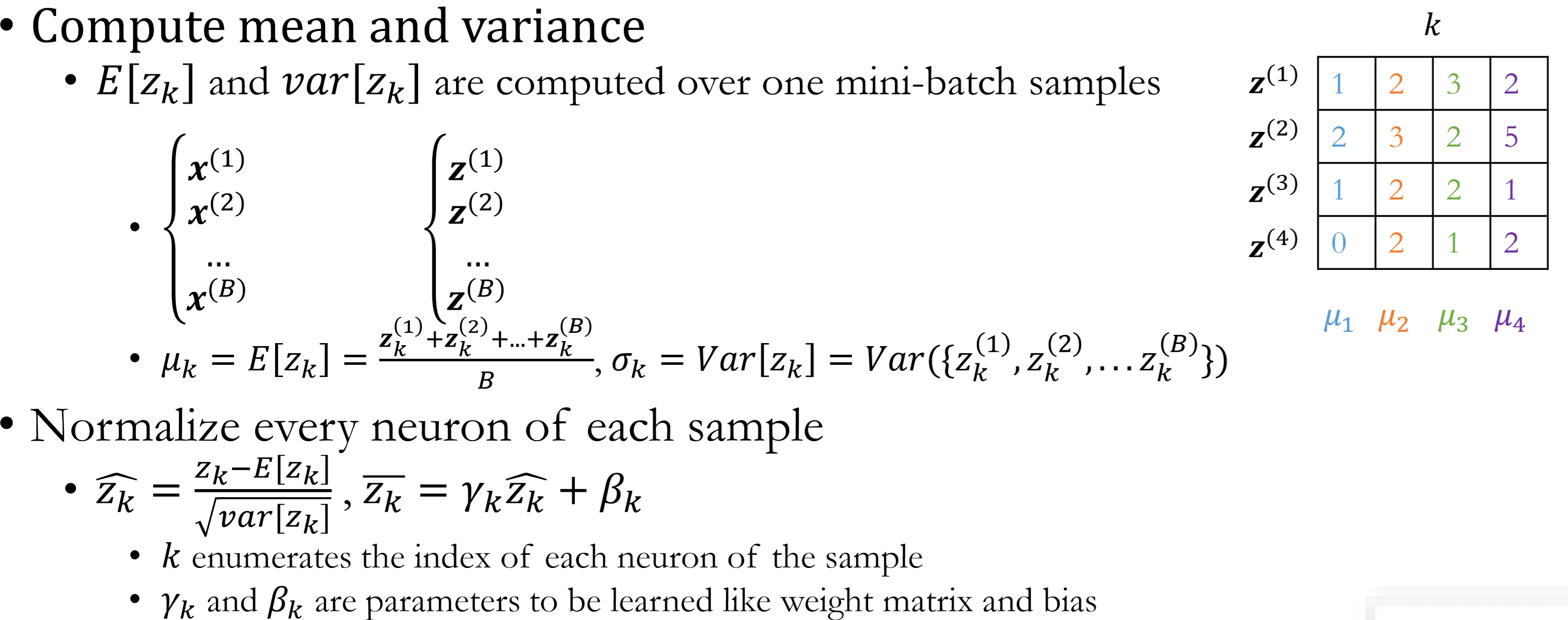

Inception V1 + Batch Normalization (BN); BN makes training converge faster.
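For reference, this is the standard training-time BN transform for one feature over a mini-batch $\mathcal{B}=\{x_1,\dots,x_m\}$. Here $\gamma$ and $\beta$ are learned scale and shift parameters, and $\epsilon$ is a small constant for numerical stability. For convolutional layers the statistics are taken per channel, over both the batch and the spatial positions.

$$
\mu_{\mathcal{B}} = \frac{1}{m}\sum_{i=1}^{m} x_i,\qquad
\sigma_{\mathcal{B}}^2 = \frac{1}{m}\sum_{i=1}^{m}\left(x_i-\mu_{\mathcal{B}}\right)^2,\qquad
\hat{x}_i = \frac{x_i-\mu_{\mathcal{B}}}{\sqrt{\sigma_{\mathcal{B}}^2+\epsilon}},\qquad
y_i = \gamma\,\hat{x}_i + \beta
$$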

After a convolutional / linear (fully connected) layer: $z=\mathrm{ReLU}(\mathrm{BN}(f(x)))$

In practice, BN is sometimes applied after the activation instead: $z=\mathrm{BN}(\mathrm{ReLU}(f(x)))$
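A minimal pure-Python sketch comparing the two orderings on a toy linear layer. The weights `W`, `b`, the mini-batch values, and the helper names (`linear`, `relu`, `batch_norm`) are hypothetical and exist only for illustration. BN uses training-mode batch statistics with $\gamma=1$, $\beta=0$.

```python
import math

def linear(x, W, b):
    """Hypothetical toy layer f(x) = W x + b for a single input vector x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]

def relu(v):
    """Element-wise ReLU."""
    return [max(0.0, t) for t in v]

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode BN: normalize each feature over the batch dimension."""
    m = len(batch)
    n_features = len(batch[0])
    out = [[0.0] * n_features for _ in range(m)]
    for k in range(n_features):
        col = [row[k] for row in batch]
        mean = sum(col) / m
        var = sum((c - mean) ** 2 for c in col) / m  # biased (1/m) batch variance
        for i in range(m):
            out[i][k] = gamma * (col[i] - mean) / math.sqrt(var + eps) + beta
    return out

# Hypothetical toy weights and a mini-batch of 4 two-dimensional inputs.
W = [[1.0, -2.0], [0.5, 1.5]]
b = [0.1, -0.3]
batch = [[1.0, 2.0], [-1.0, 0.5], [0.3, -0.7], [2.0, 1.0]]

pre = [linear(x, W, b) for x in batch]  # f(x) for each sample

# z = ReLU(BN(f(x))): normalize first, then clip negatives
z_relu_after_bn = [relu(v) for v in batch_norm(pre)]

# z = BN(ReLU(f(x))): clip first, then normalize
z_bn_after_relu = batch_norm([relu(v) for v in pre])

print(z_relu_after_bn)
print(z_bn_after_relu)
```

With ReLU last, every output is non-negative. With BN last, each feature is re-centred to zero mean over the batch, so some outputs become negative.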